Partner: WOF, Neurologische Klinik, Inselspital Bern, PD Dr. R. Müri

The goal is to provide an efficient model of visual attention (VA) for use as a general-purpose tool in artificial vision. The specific focus of this project is to consider visual attention in the presence of image sequences and to evaluate the model's performance by comparing computer attention with the attention of human subjects.

This objective is significant and timely for several reasons:

By analyzing human eye movement data recorded during visual attention experiments with static and dynamic scenes, the proposed work will provide a better understanding and improved modeling of visual attention as a human early-vision process, as well as new insights for computer vision methods.

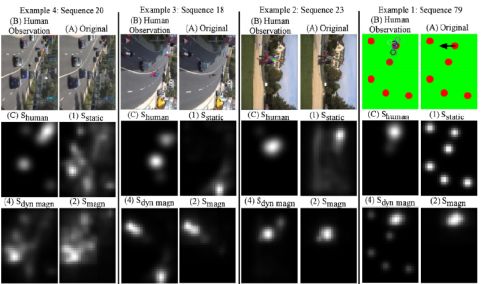

Figure 1: Four video sequences, each represented by a single original frame (A) and the corresponding eye movements of a group of human observers (B). Below is the generated human saliency map (C), which is to be compared with the computer-generated saliency maps (1), (2) and (4) corresponding to different models of VA.
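The caption does not state how the human saliency map (C) is derived from the recorded eye movements. A common approach, assumed here rather than taken from this project, is to place an isotropic Gaussian at every fixation pooled over all observers and normalize the sum to [0, 1]. The minimal Python sketch below illustrates that idea; the function name `human_saliency_map` and the default `sigma` are hypothetical.

```python
import math


def human_saliency_map(fixations, width, height, sigma=2.0):
    """Build a human saliency map from pooled eye fixations (assumed method).

    fixations: list of (x, y) pixel coordinates pooled over all observers.
    sigma: Gaussian spread in pixels; hypothetical default, in practice often
    chosen to match roughly one degree of visual angle.
    Returns a list of `height` rows of `width` floats, normalized to [0, 1].
    """
    smap = [[0.0] * width for _ in range(height)]
    # Add one Gaussian blob per fixation to every pixel of the map.
    for fx, fy in fixations:
        for y in range(height):
            for x in range(width):
                d2 = (x - fx) ** 2 + (y - fy) ** 2
                smap[y][x] += math.exp(-d2 / (2.0 * sigma ** 2))
    # Normalize so the most fixated location has saliency 1.0.
    peak = max(max(row) for row in smap)
    if peak > 0:
        smap = [[v / peak for v in row] for row in smap]
    return smap
```

The nested loops cost O(F·W·H) for F fixations and are meant only to make the construction explicit; a practical version would accumulate fixation counts in an image and apply a single Gaussian filter.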

The main results can be summarized as follows:

The major contribution of the work carried out in past years is the proposed new visual attention model, which performs optimally in the presence of dynamic scenes. On the one hand, the proposed model outperforms other models when compared with human attention. On the other hand, the model gives insights into the underlying visual process that could be further exploited in advanced modeling schemes. Another important contribution is the study of metrics for comparing computer and human attention. Finally, two application-oriented initiatives were started in the domains of Video-Based Intelligent Home and Omnidirectional Vision, with partners from HES-SO and academia.
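The text does not name the comparison metrics that were studied. As an illustration only, one widely used measure for scoring a computer-generated saliency map against a human saliency map is the linear (Pearson) correlation coefficient. The sketch below assumes both maps are given as lists of rows with the same number of pixels; `correlation_coefficient` is a hypothetical name.

```python
import math


def correlation_coefficient(map_a, map_b):
    """Pearson linear correlation between two saliency maps (assumed metric).

    Both maps are lists of rows with the same number of pixels. Returns a
    value in [-1, 1]; 1 means the maps agree up to a positive scale and offset.
    """
    a = [v for row in map_a for v in row]
    b = [v for row in map_b for v in row]
    if len(a) != len(b):
        raise ValueError("saliency maps must have the same number of pixels")
    n = len(a)
    mean_a = sum(a) / n
    mean_b = sum(b) / n
    # Covariance and variances, left unnormalized since the 1/n factors cancel.
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        raise ValueError("correlation is undefined for a constant map")
    return cov / math.sqrt(var_a * var_b)
```

For example, `correlation_coefficient(human_map, model_map)` is 1.0 when the model map is a positive rescaling of the human map and close to 0 when the two are unrelated. Because the coefficient is invariant to scale and offset, neither map needs to be normalized beforehand.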

[1] Nabil Ouerhani, Heinz Hügli, Gabriel Gruener and Alain Codourey, "A Visual Attention-Based Approach for Automatic Landmark Selection and Recognition", Lecture Notes in Computer Science, Springer-Verlag GmbH, Volume 3368, 2005 (ISSN: 0302-9743)

[2] R. von Wartburg, N. Ouerhani, T. Pflugshaupt, T. Nyffeler, P. Wurtz, H. Hügli, R. Müri, "The influence of colour on oculomotor behaviour during image perception", Neuroreport, Volume 16, Issue 14, Lippincott Williams & Wilkins, 2005

[3] H. Hügli, T. Jost, N. Ouerhani, "Model Performance for Visual Attention in Real 3D Color Scenes", Lecture Notes in Computer Science, Vol. 3562, pp. 469-478, J. Mira & J.R. Alvarez (Eds), Springer Verlag, 2005 (ISBN: 3-540-26319-5)

[4] N. Ouerhani & H. Hügli, "Robot Self-localization Using Visual Attention", Proc. 6th IEEE International Symposium on Computational Intelligence in Robotics and Automation, CIRA 2005, Helsinki University of Technology, Espoo, Finland, June 27-30, 2005

[5] N. Ouerhani, A. Bur and H. Hügli, "Visual Attention-Based Robot Self-Localization", Proc. of European Conference on Mobile Robotics (ECMR 2005), September 7-10, Ancona, Italy, pp. 8-13, 2005

[6] T. Jost, N. Ouerhani, R. von Wartburg, R. Müri and H. Hügli, "Assessing the contribution of color in visual attention", Computer Vision and Image Understanding, Volume 100, Issues 1-2, Pages 1-248, Special Issue on Attention and Performance in Computer Vision, Edited by Lucas Paletta, John K. Tsotsos, Glyn Humphreys and Robert Fisher, Elsevier, Oct.-Nov. 2005

[7] A. Bur, H. Hügli, "Dynamic visual attention: competitive versus motion priority scheme", Proc. ICVS Workshop on Computational Attention & Applications,

[8] A. Bur, H. Hügli, "Motion integration in visual attention models for predicting simple dynamic scenes", Proc. Conf. on Human Vision and Electronic Imaging XII, SPIE Vol. 6492-47, 2007

[9] A. Bur, A. Tapus, N. Ouerhani, R. Siegwart, H. Hügli, "Robot Navigation by Panoramic Vision and Attention Guided Features", Proc. ICPR, 2006

[10] N. Ouerhani, A. Bur, H. Hügli, "Linear vs. Nonlinear Feature Combination for Saliency Computation: A Comparison with Human Vision", Pattern Recognition, Series: Lecture Notes in Computer Science, Vol. 4174, pp. 314-323, Springer Verlag, 2006

[11] N. Ouerhani, T. Jost, A. Bur, H. Hügli, "Cue Normalisation Schemes in Saliency-based Visual Attention Models", Proc. Second International Cognitive Vision Workshop,

[12] A. Bur & H. Hügli, "Optimal Cue Combination for Saliency Computation: A Comparison with Human Vision", Lecture Notes in Computer Science, Springer-Verlag GmbH, LNCS 4528, pp. 109-118, 2007

[13] H. Hügli & A. Bur, "Adaptive visual attention model", Proc. Conf. Image and Vision Computing New Zealand,

[14] A. Bur, P. Wurtz, R. Müri, H. Hügli, "Dynamic visual attention: motion direction versus motion magnitude", Proc. SPIE Vol 6806, 2008
